Neural Networks

Nowadays, more and more people are talking about neural networks. They may soon be used in robotics, in mechanical engineering, and in many other spheres of human activity; Google's search algorithms, for example, have already started to rely on neural networks. What are neural networks? How do they work? What are they used for, and how can they be useful to us? We will answer these questions in this article.

Definition

Neural networks are one of the directions of research in artificial intelligence (AI). They are based on the idea of imitating the human nervous system, including its ability to correct mistakes and to learn on its own, with the ultimate goal of modeling how the human brain works.

Biological Network

In biological terms, a neural network is the human nervous system: the totality of neurons in our brain through which we think, make decisions, and perceive the world around us.

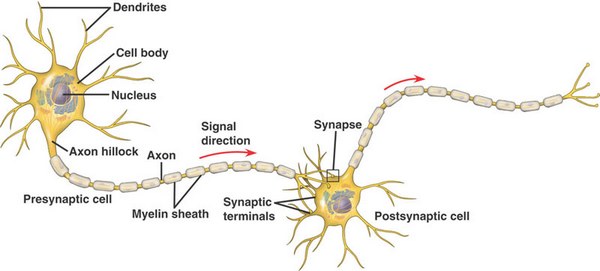

A biological neuron is a specialized cell consisting of a cell body, a nucleus, and branching processes that connect it to other cells. Each neuron is connected to thousands of other neurons, and electrochemical impulses travel along these connections, bringing the network into a state of excitation or, conversely, inhibition. For example, a pleasant and exciting event (meeting a loved one, winning a competition) generates electrochemical impulses in the neural network in our head, which excites it. The brain then passes this excitation on to other organs of the body, leading to a faster heartbeat, more frequent blinking, and so on.

In this picture, you can see a simple model of a neuron from the brain's biological neural network. The neuron consists of a cell body that contains the nucleus. Many short, branched fibers called dendrites extend from the cell body and receive signals from other neurons. A single long fiber, the axon, carries signals away from the cell body to other neurons; in reality it is usually much longer than shown in the picture. Axons link neurons to one another, and it is thanks to these connections that the biological neural network in our heads works. This, in simplified form, is how neural networks in the brain operate.

History

How did neural networks develop in science and technology? Their history begins with the advent of the first computers. In the late 1940s, Donald Hebb proposed a learning mechanism, now known as Hebbian learning: the connection between two neurons grows stronger when they are repeatedly active together. It became one of the first rules describing how a network can learn, and it later shaped learning in artificial networks.

The subsequent milestones were as follows:

- In 1954, B.G. Farley and W.A. Clark used a digital computer to simulate a neural network, one of the first practical uses of neural networks in computing.

- In 1958, Frank Rosenblatt developed the perceptron, a pattern recognition algorithm, together with its mathematical notation.

- In the 1960s, interest in neural networks faded, partly because the computers of that time lacked sufficient computing power.

- Interest revived in the 1980s, when networks with feedback mechanisms appeared and new learning algorithms were developed.

- From the 2000s onward, computing power grew enough to build and train networks on a scale that earlier researchers could only dream of.
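Rosenblatt's pattern recognition algorithm, the perceptron, learns by adjusting its weights every time it misclassifies a training example. The Python sketch below shows the classic perceptron learning rule on a toy task, the logical AND of two binary inputs. The function names, the learning rate of 1.0, and the epoch count are illustrative assumptions, not Rosenblatt's original formulation.

```python
def predict(weights, bias, x):
    """Step activation: return 1 if the weighted sum of the inputs is positive, else 0."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Train a single perceptron with the error-correction (perceptron) learning rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            # Nudge each weight in the direction that reduces the error;
            # weights of inputs that were 0 stay unchanged.
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Toy task: the logical AND of two binary inputs (linearly separable).
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])  # [0, 0, 0, 1]
```

Because the AND examples can be separated by a straight line, the rule settles on correct weights after a few passes. A single perceptron cannot learn a function such as XOR, which is not linearly separable.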

Artificial Network

An artificial neural network is a computing system that can learn from examples and gradually improve its performance. The main elements of its structure are:

- Artificial neurons: elementary interconnected units that process incoming signals.

- Synapses: connections that carry information from one neuron to another. Each synapse has a weight that strengthens or weakens the signal passing through it.

- Signals: the actual information being transmitted.
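The three elements listed above can be sketched in a few lines of Python. In this sketch each synapse is modeled as a numeric weight that multiplies the signal travelling along it, and each neuron sums its weighted inputs and passes the total through an activation function. The sigmoid activation, the bias term, the function names, and the example numbers are assumptions chosen for illustration; real networks use many different activation functions.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(signals, weights, bias):
    """One artificial neuron: weight each incoming signal (the synapses), sum, activate."""
    if len(signals) != len(weights):
        raise ValueError("each input signal needs exactly one synaptic weight")
    weighted_sum = bias + sum(s * w for s, w in zip(signals, weights))
    return sigmoid(weighted_sum)


def layer_output(signals, weight_rows, biases):
    """A layer is several neurons reading the same input signals."""
    return [neuron_output(signals, row, b) for row, b in zip(weight_rows, biases)]


# Two input signals feed a layer of two neurons, whose outputs feed one final neuron.
inputs = [1.0, 0.5]
hidden = layer_output(inputs, [[0.4, -0.6], [0.9, 0.1]], [0.0, -0.5])
output = neuron_output(hidden, [1.2, -0.7], 0.1)
print(hidden, output)
```

Connecting the outputs of one layer to the inputs of the next, as in the last two lines, is what turns individual neurons into a network.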

Network Applications

The scope of artificial neural networks is expanding every year; today they are used in such areas as:

- Machine learning, a branch of artificial intelligence in which a system learns from millions of examples of the same type of task. Search engines such as Google, Yandex, Bing, and Baidu actively use machine learning: from the millions of search queries users enter every day, their algorithms learn to show the most relevant information, so that people can find exactly what they are looking for.

- Robotics, where neural networks underlie many of the algorithms that serve as the electronic “brains” of robots.

- Computer architecture, where system designers apply neural networks to problems of parallel computing.

- Mathematics, where neural networks help solve various complex problems.

Types of Networks

Different types of neural networks are used for different tasks, for example:

- convolutional neural networks,

- recurrent neural networks,

- Hopfield neural networks.

Next, we will focus on some of them.

Convolutional Networks

Convolutional networks are among the most popular types of artificial neural networks. They have proven effective in recognizing visual images (video and photos), in recommender systems, and in language processing.

- Convolutional neural networks scale well and are used for pattern recognition.

- Their neurons are arranged in three dimensions (width, height, and depth). Each neuron is connected only to a small region of the previous layer, called its receptive field.

- Neurons in adjacent layers are connected according to spatial locality, so each neuron sees only nearby positions of the input. Many such layers are stacked, each applying learned filters followed by a non-linear activation; as a result, neurons in deeper layers respond to increasingly large areas of pixels.
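The local connectivity of convolutional layers can be illustrated with a plain 2D convolution: each output value depends only on a small window of the input (its receptive field), and the same filter weights are reused at every position. The sketch below is pure Python on a single-channel image with no padding and a stride of 1; the function names, the 3x3 vertical-edge kernel, and the ReLU non-linearity are assumptions for illustration. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped), which is what CNN layers conventionally call convolution.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Each output cell only sees a kernel-sized window of the input: its receptive field.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over the local window; the same weights are shared everywhere.
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Element-wise non-linearity applied after the convolution."""
    return [[max(0, v) for v in row] for row in feature_map]


# A 5x5 image with a vertical edge between dark (0) and bright (1) columns.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Vertical-edge kernel: responds where brightness increases from left to right.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
print(relu(conv2d(image, kernel)))  # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
```

The output is 3 in the two columns whose window covers the edge and 0 where the window sees only uniform brightness, so this filter acts as an edge detector. In a real network the kernel values are learned rather than chosen by hand.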

Recurrent Networks

In recurrent neural networks, the connections between neurons form directed cycles. These networks have the following characteristics:

- Each connection has its own weight.

- Nodes are divided into input nodes, which receive data from outside the network, hidden nodes, which hold the network's internal state, and output nodes, which produce the result.

- Information in a recurrent neural network flows not only forward, layer by layer, but also back through connections between the neurons themselves, so the state computed at one step influences the next step.

- Recurrent networks are often combined with an attention mechanism, which lets the model identify the pieces of data that require closer processing.
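The cycle in a recurrent network can be sketched as a simple Elman-style network that processes a sequence one element at a time: the hidden state computed at one step is fed back as an extra input at the next step, so the network carries information about earlier elements. The sketch uses plain Python loops, a tanh activation, and hand-picked weights; the names `W_xh`, `W_hh`, and `b_h` are illustrative assumptions, and no attention mechanism is included.

```python
import math


def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One time step: new hidden state from the current input and the previous hidden state."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][k] * x[k] for k in range(len(x)))  # input -> hidden
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))  # hidden -> hidden (the cycle)
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, W_xh, W_hh, b_h):
    """Feed a sequence through the network, returning the hidden state after each step."""
    h = [0.0] * len(b_h)  # initial state: no memory yet
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# One input feature, two hidden units; weights are arbitrary illustrative values.
W_xh = [[0.5], [-0.3]]
W_hh = [[0.8, 0.0], [0.2, 0.5]]
b_h = [0.0, 0.0]
states = run_rnn([[1.0], [0.0], [0.0]], W_xh, W_hh, b_h)
for t, h in enumerate(states):
    print(t, [round(v, 3) for v in h])
```

At steps 1 and 2 the input is zero, yet the hidden state stays non-zero: the recurrent weights `W_hh` carry information forward from the first input, and it fades gradually rather than disappearing at once.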

Recurrent neural networks are used to recognize and process sequential data such as text and speech; Google Translate and Apple's Siri voice assistant, for example, have relied on them.

References and Further Reading

- McCulloch, Warren; Walter Pitts (1943). “A Logical Calculus of Ideas Immanent in Nervous Activity”. Bulletin of Mathematical Biophysics. 5 (4): 115–133. doi:10.1007/BF02478259.

- Kleene, S.C. (1956). “Representation of Events in Nerve Nets and Finite Automata”. Annals of Mathematics Studies (34). Princeton University Press. pp. 3–41.

- Hebb, Donald (1949). The Organization of Behavior. New York: Wiley. ISBN 978-1-135-63190-1.

- Farley, B.G.; W.A. Clark (1954). “Simulation of Self-Organizing Systems by Digital Computer”. IRE Transactions on Information Theory. 4 (4): 76–84. doi:10.1109/TIT.1954.1057468.

- Rochester, N.; J.H. Holland; L.H. Haibt; W.L. Duda (1956). “Tests on a cell assembly theory of the action of the brain, using a large digital computer”. IRE Transactions on Information Theory. 2 (3): 80–93. doi:10.1109/TIT.1956.1056810.

Author: Pavlo Chaika, Editor-in-Chief of the journal Poznavayka

When writing this article, I tried to make it as interesting and useful as possible. I would be grateful for any feedback and constructive criticism in the comments. You can also send your wishes, questions, or suggestions to my email, pavelchaika1983@gmail.com, or via Facebook.